The time-series database (TSDB)

Module Introduction

From both an operational and a planning point of view, it is important to be able to collect meaningful operational values (or metrics) from network elements and track their evolution over time.

Some typical examples are:

-

Tracking the received power level of optical interfaces, to detect early indications of signal degradation (e.g. due to optics degradation)

-

Tracking memory utilization of different processes on each node, to be able to correct memory exhaustion issues before they cause any operational impact.

This capability is especially useful if coupled with a mechanism to generate alerts when anomalies are detected.

As the network grows, it is also crucial to be able to aggregate metrics collected from multiple switches, to get an overall picture of the state of the network.

Some possible examples of aggregated metrics could be:

-

The number of errors or discarded packets on a fabric, typically expressed as a rate (that is, errors per time period)

-

The maximum utilization among all links of a network fabric over an interval.
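As a rough illustration of these two aggregations, the Python sketch below computes a fabric-wide error rate and the maximum link utilization from per-switch samples. The switch names, link names, values and the 60-second interval are all hypothetical, and no counter resets are assumed; in a real deployment these aggregations would be expressed as queries on the central Prometheus server rather than in application code.

```python
"""Sketch: fabric-wide aggregation of per-switch metrics (hypothetical data)."""

INTERVAL_SECONDS = 60

# Hypothetical error counters per switch: (value at start, value at end)
# of a 60-second interval. No counter resets occur in this example.
error_counters = {
    "R1": (1200, 1260),
    "R2": (400, 430),
    "R3": (75, 75),
}

# Hypothetical link utilization samples (percent) taken during the interval.
link_utilization = {
    ("R1", "link-1"): [35.0, 41.5, 38.2],
    ("R2", "link-2"): [62.1, 77.4, 70.0],
    ("R3", "link-3"): [12.0, 15.3, 9.8],
}


def fabric_error_rate(counters, interval):
    """Errors per second across the fabric: summed increases / interval."""
    total_increase = sum(end - start for start, end in counters.values())
    return total_increase / interval


def fabric_max_utilization(samples):
    """Highest utilization observed on any link during the interval."""
    return max(max(values) for values in samples.values())


print(f"errors/s: {fabric_error_rate(error_counters, INTERVAL_SECONDS):.2f}")
# errors/s: 1.50
print(f"max link utilization: {fabric_max_utilization(link_utilization)}%")
# max link utilization: 77.4%
```

In PromQL terms, the first function corresponds roughly to summing rate() results across switches, and the second to taking max() over max_over_time() results.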

To this end, RBFS can run the open-source Prometheus monitoring and alerting software locally.

This component includes:

-

A time-series database, where collected, time-stamped data can be stored

-

An alerting subsystem that notifies operators of metric changes that need attention

-

A federation mechanism that allows selected metrics to be collected from each node and aggregated by a central server

This functionality is inactive by default, but can be enabled from configuration.

Enabling the RBFS time-series database (TSDB) involves these steps:

-

Configuring one or more metrics (that is, specifying what needs to be observed and collected)

-

Configuring a metric profile that specifies how often each metric is collected

Once a metric is defined and collected, we can create alerts on it. This means:

-

Defining alerts, that is, the conditions on the collected values that should trigger an alert

-

Defining alert profiles, which allow an operator to aggregate multiple alerts and specify how often to re-send the alert if still active.

To see this in action, we will follow the steps above: define a simple metric and associate an alert condition with its values.

Before you start the hands-on part of this module, you should load the appropriate configuration and verify that the testbed is up and running by executing the corresponding robot file:

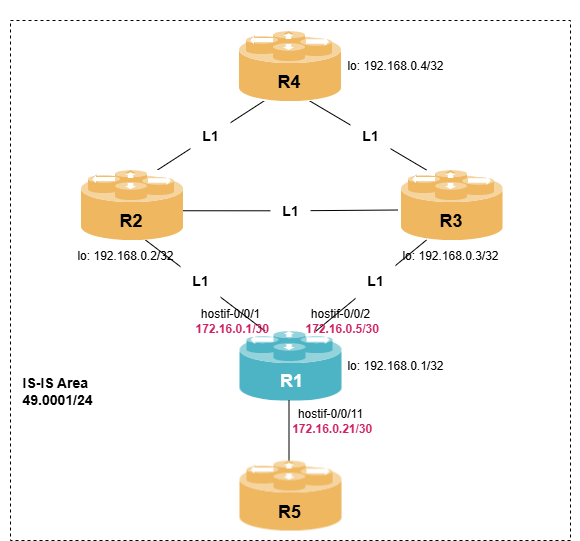

student@tour:~/trainings_resources/robot$ robot tsdb/tsdb_setup.robot

To test the time-series database, we will use a simple IS-IS Level-1 setup and configure metric collection on R1.

Metrics

Metrics can be of two main types: object metrics and index metrics.

We will first define a simple object metric and associate a profile with it so that it can be collected. Then we will show index metrics, which are slightly less intuitive to configure but are useful when the values you are interested in are not attributes in a table, but properties of the table itself, such as the number of unique entries in it.

Defining a simple object metric

Object metrics are defined on the values of one or more attributes present in the rows of RtBrick Datastore (BDS) tables; we will use this type of metric in our first example.

Object metric values can be of two datatypes:

-

counter: used for monotonically increasing numerical values (for example, the number of packets transmitted out of an interface, or the number of errors on a link), where we are typically interested in the rate of change (the difference between values over time)

-

gauge: used for numerical values that can either increase or decrease (for example, the temperature of a component, the speed of a fan on the switch chassis, or the CPU utilization at a given instant).
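The practical difference between the two datatypes shows up when the samples are processed. The Python sketch below is a simplified model, not the RBFS or Prometheus implementation: for a counter it sums the increases between consecutive samples, treating any decrease as a counter reset in the same spirit as the Prometheus increase() and rate() functions but without their extrapolation; for a gauge it simply reports the latest value. The function names and sample values are hypothetical.

```python
"""Simplified model of how counter and gauge samples are interpreted."""


def counter_increase(samples):
    """Total increase of a counter across a list of (timestamp, value) samples.

    A drop in value is treated as a counter reset (for example after a process
    restart): the counter is assumed to have restarted from zero, so the new
    value itself counts as the increase for that step.
    """
    increase = 0.0
    for (_, previous), (_, current) in zip(samples, samples[1:]):
        if current >= previous:
            increase += current - previous
        else:  # counter reset
            increase += current
    return increase


def counter_rate(samples):
    """Average per-second rate of change over the sampled time span."""
    elapsed = samples[-1][0] - samples[0][0]
    return counter_increase(samples) / elapsed if elapsed > 0 else 0.0


def gauge_value(samples):
    """A gauge is meaningful as-is: report the most recent sample."""
    return samples[-1][1]


# Hypothetical samples taken every 10 seconds: (seconds, value).
tx_errors = [(0, 100), (10, 130), (20, 160), (30, 20)]  # reset before t=30
temperature = [(0, 41.0), (10, 43.5), (20, 42.0), (30, 40.5)]

print(counter_increase(tx_errors))  # 30 + 30 + 20 = 80.0
print(counter_rate(tx_errors))
print(gauge_value(temperature))     # 40.5
```

Reading the raw counter value (20 at t=30) would be misleading here, which is why counters are normally consumed as increases or rates, while a gauge such as temperature is useful exactly as sampled.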

The first step in our configuration is to define the value we intend to monitor: this means identifying the table in the BDS datastore that contains the values we are interested in, the row of the table that contains the value, and the actual attribute to collect.

Suppose for example that we are interested in tracking the number of IS-IS neighbors in the default instance which are in UP state; first we need to identify where this information is stored in order to collect it.

Use the command show datastore isis.appd.1 table global.isis.instance to find which attribute can be used to count the number of IS-IS adjacencies in UP state in the default instance.

Answer:

supervisor@R1>rtbrick: op> show datastore isis.appd.1 table global.isis.instance

Attribute Type Length Value

instance_name (1) string (9) 8 default

...

level1_neighbor_count (20) uint32 (4) 4 3

level2_neighbor_count (21) uint32 (4) 4 0

...

From the output, we can see that a good candidate is the level1_neighbor_count attribute, provided we select the row of the table that corresponds to the default instance with the condition instance_name=default.

So to define this metric, we will need to configure:

-

The timeseries name; for example, we can use isis_level1_up

-

The table in BDS where the data we are interested in is stored (in this case, global.isis.instance)

-

The type of timeseries (in this case, object, as we are interested in an attribute of a specific row in the table)

-

The datatype of its value, counter or gauge (in this case, gauge)

Then, within the table, we need to specify the row we are interested in (the one with instance_name=default), and the attribute (the column in the table) which contains the value we need to monitor.

To do this, we will need two extra configuration statements:

-

A filter statement, to select only the row of the table that contains data about the default IS-IS instance

-

The name of the attribute we need to monitor which, as we have seen above, is level1_neighbor_count
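Conceptually, an object metric with an exact-match filter selects one row and reads one attribute from it. The Python sketch below models the global.isis.instance table as a list of dictionaries; apart from the instance_name and neighbor-count values taken from the datastore output above, the rows (including the second instance, vrf_a) and the helper object_metric_values are hypothetical, and this is not how BDS stores or queries tables internally.

```python
"""Sketch: what an exact-match filter plus an attribute selects from a table."""

# Hypothetical model of global.isis.instance: one dict per row.
isis_instance_table = [
    {"instance_name": "default", "level1_neighbor_count": 3,
     "level2_neighbor_count": 0},
    {"instance_name": "vrf_a", "level1_neighbor_count": 1,
     "level2_neighbor_count": 2},
]


def object_metric_values(table, filters, attribute):
    """Return the attribute value of every row matching all exact filters.

    filters is a list of (attribute_name, value) pairs, mirroring the
    match-attribute-name / match-attribute-value / match-type exact settings.
    """
    return [
        row[attribute]
        for row in table
        if all(row.get(name) == value for name, value in filters)
    ]


values = object_metric_values(
    isis_instance_table,
    filters=[("instance_name", "default")],
    attribute="level1_neighbor_count",
)
print(values)  # [3]
```

Without the filter, this sketch returns one value per row ([3, 1]), which illustrates why the filter is needed to pin the metric to the default instance.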

The actual metric configuration should look similar to this:

"rtbrick-config:time-series": {

"metric": [

{

"name": "isis_level1_up",

"table-name": "global.isis.instance",

"bds-type": "object-metric",

"prometheus-type": "gauge",

"description": "Number of IS-IS Level-1 neighbors in UP state",

"filter": [

{

"match-attribute-name": "instance_name",

"match-attribute-value": "default",

"match-type": "exact"

}

],

"attribute": [

{

"attribute-name": "level1_neighbor_count"

}

]

}

]

}

Configure the isis_level1_up metric and commit the configuration.

Once the configuration is committed, verify the current value with the command show time-series metric isis_level1_up.

Then, verify that the actual number of L1 adjacencies matches the collected metric value with the command show isis neighbor instance default.

Answer:

switch-mode config

set time-series metric isis_level1_up

set time-series metric isis_level1_up table-name global.isis.instance

set time-series metric isis_level1_up bds-type object-metric

set time-series metric isis_level1_up prometheus-type gauge

set time-series metric isis_level1_up description "Number of IS-IS Level-1 neighbors in UP state"

set time-series metric isis_level1_up filter instance_name

set time-series metric isis_level1_up filter instance_name match-attribute-value default

set time-series metric isis_level1_up filter instance_name match-type exact

set time-series metric isis_level1_up attribute level1_neighbor_count

commit

supervisor@R1>rtbrick: cfg> show time-series metric isis_level1_up

Metric: isis_level1_up

Value: 3 sampled at 11-JAN-2026 20:08:08

Labels:

bd_name : isis.iod.1

element_name : R1

instance : localhost:6002

job : bds_default

pod_name : rtbrick-pod

supervisor@R1>rtbrick: cfg> show isis neighbor instance default

Instance: default

Interface System Level State Type Up since Expires

hostif-0/0/1/0 R2 L1 Up P2P Sun Jan 11 16:34:00 in 26s 2578us

hostif-0/0/2/0 R3 L1 Up P2P Sun Jan 11 16:34:00 in 19s 625837us

hostif-0/0/11/0 R5 L1 Up Broadcast Sun Jan 11 16:33:59 in 20s 830734us

Defining a metric profile

Now that our gauge metric is defined, we need to define a metric profile that sets the collection (scrape) interval, and associate the profile with our metric.

We can define a profile named every_10_secs which collects data every 10 seconds with this configuration:

switch-mode config

set time-series metric-profile every_10_secs scrape-interval 10s

Then we can associate this profile with our metric, commit, and go back to operational mode:

set time-series metric isis_level1_up profile-name every_10_secs

commit switch-to-op

From this point on, the metric will be collected and stored in the time-series database, and will be available both to the internal alerting subsystem and for collection and aggregation by a centralized Prometheus instance.
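The scrape interval determines how many samples end up in the database, which matters later when alert expressions look at a time window such as [10s]. The Python sketch below is a simplified model that assumes perfectly regular scrapes starting at t=0 (real scrapes are offset and may jitter); the helper names are hypothetical. It treats a range window as (end - window, end]; boundary handling has differed between Prometheus versions, so a sample landing exactly on the window start may or may not be included in practice.

```python
"""Sketch: which scraped samples fall inside a PromQL-style range window."""


def scrape_times(scrape_interval, duration):
    """Timestamps (seconds) of idealized, perfectly regular scrapes."""
    return list(range(0, duration + 1, scrape_interval))


def samples_in_window(timestamps, end, window):
    """Samples in the half-open window (end - window, end]."""
    return [t for t in timestamps if end - window < t <= end]


# every_10_secs profile: one sample every 10 seconds.
times = scrape_times(scrape_interval=10, duration=60)
print(times)                                        # [0, 10, 20, 30, 40, 50, 60]
print(samples_in_window(times, end=55, window=10))  # [50]
print(samples_in_window(times, end=55, window=30))  # [30, 40, 50]
```

With a 10-second scrape interval, a [10s] window therefore holds only about one sample, so longer windows are needed when an expression should summarize several collected values.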

Defining an index metric

Unlike object metrics, index metrics are not meant to capture the values of attributes in the rows of RtBrick Datastore tables; instead, they count the number of objects in an index defined on a table.

Index metrics are less intuitive than object metrics, as their value depends on how tables are indexed in order to efficiently retrieve single rows.

To address this complexity, we will show one of the most common and useful use cases for index metrics: tables where an index metric is equivalent to the number of objects in the table (the row count).

Let’s consider, for example, the table that stores the set of active IPv4 routes in the default instance, that is, the routes actually installed and used to forward IPv4 packets: default.ribd.fib-local.ipv4.unicast.

Try running the command show datastore ribd table default.ribd.fib-local.ipv4.unicast, just to get some insight into how routes are stored internally.

From an operations point of view, it is useful to monitor the size of this table to prevent resource issues (that is, to make sure the number of active routes stays well within the hardware capabilities of the system).

First, let’s check which indexes are defined on the default.ribd.fib-local.ipv4.unicast table.

Use the command show datastore ribd table default.ribd.fib-local.ipv4.unicast summary to check which indexes are available on the table.

Pay special attention to the Index column, which holds the index name, and the Active column, which holds the number of active entries in the index.

Answer:

supervisor@R1>rtbrick: cfg> show datastore ribd table default.ribd.fib-local.ipv4.unicast summary

Table name: default.ribd.fib-local.ipv4.unicast Maximum-sequence: 105 Table ID: 96

Index Type Active Obj Memory Index Memory

sequence-index bds_rtb_bplus 17 2 KB 1 KB

gc-index bds_rtb_bplus 0 0 KB 0 KB

primary radix 17 2 KB 1 KB

nh_rib_map_index libtrees-bplus 17 2 KB 1 KB

The primary index (of type radix) is used for longest-prefix lookups on the IPv4 routing table, and is a good candidate for our metric: the active-entry-count of this index will match the number of active IPv4 unicast routes.
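To see why the choice of index matters, the Python sketch below models a route table that keeps two indexes side by side, loosely following the summary output above. The RouteTable class and its structures are hypothetical simplifications, not BDS internals: a dict stands in for the radix primary index and holds exactly one entry per active route, so its entry count equals the row count; gc_index is modeled, as an assumption based only on its name, as a list of removed routes awaiting cleanup, which stays at 0 like gc-index in the output above and says nothing about the number of routes.

```python
"""Sketch: an index metric reports an index's entry count (hypothetical model)."""
import ipaddress


class RouteTable:
    """Toy table with two indexes, loosely inspired by the summary output."""

    def __init__(self):
        self.primary = {}   # prefix -> next hop, stands in for the radix index
        self.gc_index = []  # hypothetical: removed routes awaiting cleanup

    def add(self, prefix, next_hop):
        self.primary[ipaddress.ip_network(prefix)] = next_hop

    def remove(self, prefix):
        network = ipaddress.ip_network(prefix)
        self.gc_index.append((network, self.primary.pop(network)))

    def active_entry_count(self, index_name):
        """What an index metric on this table would report."""
        indexes = {"primary": self.primary, "gc-index": self.gc_index}
        return len(indexes[index_name])


table = RouteTable()
for i in range(17):
    table.add(f"10.0.{i}.0/24", "192.0.2.1")

print(table.active_entry_count("primary"))   # 17
print(table.active_entry_count("gc-index"))  # 0
```

Removing a route in this model lowers the primary count to 16 and raises the gc_index count to 1, so only the primary index tracks the number of active routes.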

Now we have all information we need, and we can define our index metric on the default.ribd.fib-local.ipv4.unicast table.

If we name our metric ipv4_unicast_routes, the definition (under the time-series hierarchy) should look similar to this:

"rtbrick-config:metric": [

{

"name": "ipv4_unicast_routes",

"table-name": "default.ribd.fib-local.ipv4.unicast",

"bds-type": "index-metric",

"prometheus-type": "gauge",

"index-name": "primary",

"description": "Number of active IPv4 routes in the default instance",

"attribute": [

{

"attribute-name": "active-entry-count"

}

]

}

]

Define the ipv4_unicast_routes index metric with the parameters above, and commit the configuration. Verify that the metric is configured, and check its current value, with

show time-series metric ipv4_unicast_routes

Finally, confirm that the collected value is correct by comparing it with the output of the CLI command

show route ipv4 unicast instance default summary

Answer:

set time-series metric ipv4_unicast_routes

set time-series metric ipv4_unicast_routes table-name default.ribd.fib-local.ipv4.unicast

set time-series metric ipv4_unicast_routes bds-type index-metric

set time-series metric ipv4_unicast_routes prometheus-type gauge

set time-series metric ipv4_unicast_routes index-name primary

set time-series metric ipv4_unicast_routes description "Number of active IPv4 routes in the default instance"

set time-series metric ipv4_unicast_routes attribute active-entry-count

commit

supervisor@R1>rtbrick: cfg> show time-series metric ipv4_unicast_routes

Metric: ipv4_unicast_routes

Value: 17 sampled at 16-JAN-2026 17:26:22

Labels:

bd_name : ribd

element_name : R1

instance : localhost:5512

job : bds_default

pod_name : rtbrick-pod

supervisor@R1>rtbrick: cfg> show route ipv4 unicast instance default summary

Instance: default

Source Routes

arp-nd 3

direct 7

isis 7

Total Routes 17

Alerts

Up to now, we have seen how to define metrics and specify how often they are collected and stored. The next step is to define alerts and alert conditions, to notify network operations teams when collected metric values deviate from their normal range and indicate potential issues that need to be investigated.

Finally, we will see how alerts can be grouped together, and how you can specify the interval at which active alerts need to be re-sent to prevent them from being missed.

Defining alerts on metrics

Once metrics are defined and are being collected, it is possible to define alerts triggered by conditions on their values.

An alert configuration contains, among other things:

-

An expression, in the PromQL language, describing the conditions that trigger the alert

-

A duration, specifying how long the condition must remain true before the alert fires. This is useful to suppress transient conditions, if desired.

-

An interval, that specifies how often the triggering expression is evaluated

-

An alert group, which can be used to aggregate alerts

-

Metadata such as a description, a summary and annotations

-

Labels, which are name-value pairs that can be used to categorize alerts and map them to alert profiles

It is useful to define a label repeat_interval whose value expresses a duration, and to use this label to associate an alert profile in which the actual repeat interval is specified. Alert profiles are discussed later in this guide.
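The interaction between the duration (for) and the evaluation interval can be modeled as a small state machine. The Python sketch below follows the inactive, pending and firing states used by Prometheus alerting rules, in simplified form: the expression is only checked at each evaluation, an alert becomes pending the first time it is found true, and it fires at the first evaluation where it has been continuously true for at least the for duration. The function name and the evaluation results are hypothetical.

```python
"""Sketch of the inactive -> pending -> firing alert state machine."""


def evaluate_alert(results, interval, hold):
    """Return the alert state after each evaluation.

    results: condition outcome (True/False) at each evaluation, one per interval
    interval: seconds between evaluations
    hold: the "for" duration in seconds
    """
    states = []
    active_since = None
    for step, condition in enumerate(results):
        now = step * interval
        if not condition:
            active_since = None          # condition cleared: back to inactive
            states.append("inactive")
        elif active_since is None:
            active_since = now           # first time true: start the clock
            states.append("firing" if hold == 0 else "pending")
        elif now - active_since >= hold:
            states.append("firing")
        else:
            states.append("pending")
    return states


# Evaluated every 60 s with for=10s: the condition is first seen at the
# second evaluation, so the alert fires one evaluation later.
print(evaluate_alert([False, True, True, True, False], interval=60, hold=10))
# ['inactive', 'pending', 'firing', 'firing', 'inactive']
```

With an interval of 1m, as in the isis_below_three example, a 10-second for duration means that in this model the alert fires at the evaluation after the one that first sees the condition, roughly one minute later.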


As a simple example, we can define an alert named isis_below_three that fires if the number of IS-IS Level-1 neighbors in UP state stays below three for an entire 10-second window. We can assign a severity level of 2 (critical) to this event, and attach to it a label repeat_interval with a value of 10m, which we will use later on when we define the alert profile.
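The condition described above corresponds to the PromQL expression max_over_time(isis_level1_up[10s]) < 3. The Python sketch below mimics that expression on hypothetical (timestamp, value) samples taken every 5 seconds: it takes the maximum of the samples within the window ending at the evaluation time and compares it with the threshold. Because the maximum is used, a single sample at 3 or above in the window keeps the condition false. The helper names are hypothetical, and the window is treated as (end - 10 s, end], since exact boundary handling varies between Prometheus versions.

```python
"""Sketch: evaluating max_over_time(metric[window]) < threshold on samples."""


def max_over_time(samples, end, window):
    """Maximum sample value in (end - window, end], or None if no samples."""
    values = [v for t, v in samples if end - window < t <= end]
    return max(values) if values else None


def below_threshold(samples, end, window, threshold):
    """True when the windowed maximum exists and is below the threshold."""
    peak = max_over_time(samples, end, window)
    return peak is not None and peak < threshold


# Hypothetical isis_level1_up samples every 5 s: one neighbor is lost at t=20.
samples = [(0, 3), (5, 3), (10, 3), (15, 3), (20, 2), (25, 2), (30, 2)]

for end in (15, 20, 25, 30):
    print(end, below_threshold(samples, end, window=10, threshold=3))
# 15 False
# 20 False
# 25 True
# 30 True
```

At t=20 the latest sample is already 2, but the window still contains the value 3 sampled at t=15, so the condition only becomes true once the whole window holds values below three.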

The alert configuration should look similar to this:

{

"rtbrick-config:alert": [

{

"name": "isis_below_three",

"group": "routing",

"for": "10s",

"interval": "1m",

"expr": "max_over_time(isis_level1_up[10s]) < 3",

"level": "2",

"summary": "Less than 3 IS-IS UP neighbors",

"description": "Less than 3 IS-IS neighbors are in UP state",

"labels": [

"repeat_interval:10m"

]

}

]

}

Configure the isis_below_three alert with the parameters described above; remember that alerts are configured in the time-series hierarchy.

Then, commit the configuration.

Answer:

set time-series alert isis_below_three

set time-series alert isis_below_three group routing

set time-series alert isis_below_three for 10s

set time-series alert isis_below_three interval 1m

set time-series alert isis_below_three expr "max_over_time(isis_level1_up[10s]) < 3"

set time-series alert isis_below_three level 2

set time-series alert isis_below_three summary "Less than 3 IS-IS UP neighbors"

set time-series alert isis_below_three description "Less than 3 IS-IS neighbors are in UP state"

set time-series alert isis_below_three labels repeat_interval:10m

commit

To simulate a failure and trigger the alert, we can disable the adjacency with one of the three IS-IS neighbors in the configuration.

set instance default protocol isis interface hostif-0/0/1/0 level-1 adjacency-disable true

commit

After committing, let’s verify the state of the alerts by running show time-series alert.

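Remember that the condition must hold for the configured for duration (10 seconds) before the alert fires, and that the expression is evaluated only once per interval (1 minute): the alert first transitions to pending and then, at a later evaluation, to firing, so it may take a minute or two before it shows as firing.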

supervisor@R1>rtbrick: cfg> show time-series alert

State Since Level Summary

firing 13-JAN-2026 11:30:16 Critical Less than 3 IS-IS UP neighbors

You can get the details of any of the active alerts by specifying their name; this will also tell you when the alert was first fired:

supervisor@R1>rtbrick: cfg> show time-series alert isis_below_three

Alert: isis_below_three

Firing since 13-JAN-2026 11:30:16

Level: Critical

Summary: Less than 3 IS-IS UP neighbors

Description: Less than 3 IS-IS neighbors are in UP state

Labels:

alertname : isis_below_three

bd_name : isis.iod.1

element_name : R1

instance : localhost:6002

job : bds_every_10_secs

pod_name : rtbrick-pod

repeat_interval : 10m

Annotations:

description : Less than 3 IS-IS neighbors are in UP state

level : 2

summary : Less than 3 IS-IS UP neighbors

Alert profiles

Alert profiles allow you to group several alerts and specify how often notifications should be re-sent if the alert condition persists.

As an example, you could define a profile called hardware-alert that groups several individual alerts indicating potential hardware issues (for example, temperature, fan or power supply issues); once the network operations team has been notified, they can check and troubleshoot the specific problem.

Similarly, you could group alerts related to individual switch resources (e.g. excessive CPU usage, high memory utilization or low disk space) into a resource-alert group, to warn about issues that could lead to resource exhaustion. Alerts can then be relayed via the CtrlD process to external Network Fault Management Systems, to notify the network operations team of the potential issue before it has any actual impact.

Alert profiles are configured under the time-series configuration hierarchy, and are associated with alerts via the labels attached to each alert.

The real usefulness of alert profiles lies in grouping alerts to simplify their management but, just as an example, we can configure a simple alert profile associated with the isis_below_three alert we defined above, and request that the alert be re-sent every 10 minutes while it is active:

```

"rtbrick-config:alert-profile": [

{

"profile-name": "every_10_mins",

"priority": 2,

"repeat-interval": "10m",

"matchers": [

"repeat_interval=10m"

]

}

]

```

The matchers statement is what ties the alert profile to the alert, via the repeat_interval alert label.
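The matching and re-send behavior can be sketched as follows. This Python model is an assumption-based simplification loosely inspired by Prometheus Alertmanager matchers, not the RBFS implementation: a matcher written as name=value is treated as an exact equality test on one alert label, a profile applies when all its matchers hold, and a continuously firing alert is re-notified each time the repeat interval elapses (real Alertmanager timing also depends on its grouping intervals). The labels are a subset of the show time-series alert isis_below_three output above; the dictionaries and helper functions are hypothetical, and the priority field is not modeled.

```python
"""Sketch: matching alert labels to a profile and scheduling re-notifications."""

# Labels shown for the isis_below_three alert (subset of the output above).
alert_labels = {
    "alertname": "isis_below_three",
    "element_name": "R1",
    "repeat_interval": "10m",
}

# Hypothetical in-memory version of the every_10_mins alert profile.
profile = {
    "profile-name": "every_10_mins",
    "repeat-interval": "10m",
    "matchers": ["repeat_interval=10m"],
}


def parse_duration(text):
    """Convert durations such as '30s', '10m' or '1h' to seconds."""
    units = {"s": 1, "m": 60, "h": 3600}
    return int(text[:-1]) * units[text[-1]]


def profile_matches(labels, matchers):
    """Every 'name=value' matcher must equal the corresponding alert label."""
    for matcher in matchers:
        name, _, value = matcher.partition("=")
        if labels.get(name) != value:
            return False
    return True


def notification_times(firing_for, repeat_interval):
    """Offsets (s) at which a continuously firing alert is (re)notified."""
    step = parse_duration(repeat_interval)
    return list(range(0, firing_for + 1, step))


if profile_matches(alert_labels, profile["matchers"]):
    # Alert firing for 35 minutes: notified at 0, 10, 20 and 30 minutes.
    print(notification_times(35 * 60, profile["repeat-interval"]))
    # [0, 600, 1200, 1800]
```

Because the profile selects alerts by label rather than by name, any other alert carrying repeat_interval=10m would be handled by the same profile, which is what makes grouping possible.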

Configure the every_10_mins alert profile with the parameters described above.

Commit the configuration, then use the show time-series alert-profiles command to verify it.

Answer:

set time-series alert-profile every_10_mins

set time-series alert-profile every_10_mins priority 2

set time-series alert-profile every_10_mins repeat-interval 10m

set time-series alert-profile every_10_mins matchers repeat_interval=10m

commit

Enabling TSDB self-monitoring

Up to now, we have been defining metrics and alerts based on values in the RtBrick Datastore, that is, on the state of network elements. In this last exercise, we will enable monitoring of the Prometheus monitoring subsystem itself; this will allow us to detect error conditions that could affect metric collection and alerting.

Since Prometheus health metrics are themselves stored in the time-series database, you need to specify how often they are collected (i.e. the scrape-interval).

Once we do this, we will also be able to see statistics about the actual metrics being collected.

You can enable self-monitoring with this configuration:

set time-series self-monitoring enable true

set time-series self-monitoring scrape-interval 30s

commit

After doing this, you should be able to see the list of configured metric profiles and the counters for the different Prometheus error conditions using the command show time-series statistics.

Remember that after committing you need to wait for 30 seconds in order for the first collection run to populate the database and show error and status counters.

Configure self-monitoring using the configuration described above.

Commit the configuration, then use show time-series statistics to check status and error counters.

Remember that the counters will be visible only after about 30 seconds.

Answer:

set time-series self-monitoring enable true

set time-series self-monitoring scrape-interval 30s

commit

Running show time-series statistics immediately afterwards shows no data yet, as the actual database entries are created only after the first collection run:

supervisor@R1>rtbrick: cfg> show time-series statistics

Scrape Targets

Metric Profile Daemons

No time-series database entry for prometheus_target_scrapes_sample_duplicate_timestamp_total found.

No time-series database entry for prometheus_target_scrapes_exemplar_out_of_order_total found.

No time-series database entry for prometheus_target_scrapes_sample_out_of_bounds_total found.

No time-series database entry for prometheus_target_scrapes_sample_out_of_order_total found.

No time-series database entry for prometheus_target_scrapes_exceeded_sample_limit_total found.

Failed querying the time-series statistics.

Waiting about 30 seconds shows both the absolute counters and their increase over the last 5 minutes:

supervisor@R1>rtbrick: cfg> sh time-series statistics

Scrape Targets

Metric Profile Daemons

every_10_secs 1

Error Counters

Name Count Increase (in last 5 mins)

prometheus_target_scrapes_sample_duplicate_timestamp_total 0 0

prometheus_target_scrapes_exemplar_out_of_order_total 0 0

prometheus_target_scrapes_sample_out_of_bounds_total 0 0

prometheus_target_scrapes_sample_out_of_order_total 0 0

prometheus_target_scrapes_exceeded_sample_limit_total 0 0

In addition, if you type the CLI command show time-series metric followed by a question mark (?), you will see that another metric called rtbrick_invalid_metric_config has been automatically created to collect Prometheus errors.

Module Summary

In this short introduction to the time-series database and alerting, we:

-

Described some possible uses of the built-in time-series database (TSDB)

-

Defined step-by-step a simple object metric and an index metric, then used metric-profiles to specify the collection interval

-

Configured alerts and alert profiles on our sample metrics

-

Finally, we enabled self-monitoring to check the health of the Prometheus process itself

While these examples are (for brevity and clarity) very simple, they should serve as an initial foundation for building more complex metrics that are specific to your actual operational requirements.

For more information on the time-series database, please refer to the documentation.