Controller Daemon

This chapter describes the Controller Daemon (CtrlD), a major software component in the RBFS ecosystem. It serves as a proxy to various APIs, including high-level APIs for RBFS configuration.

CtrlD Overview

CtrlD operates on the host OS (ONL) and it is the single entry point to the router running the RBFS software. CtrlD controls and manages most of the tasks and functions in an RBFS ecosystem. You can run multiple instances of the CtrlD on an RBFS device.

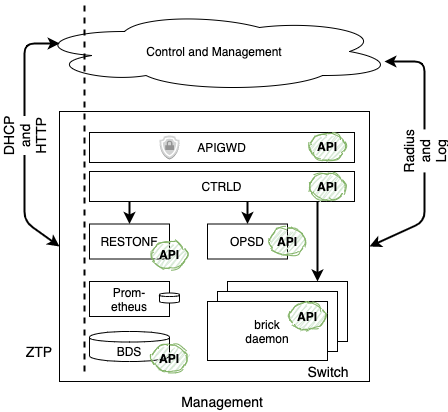

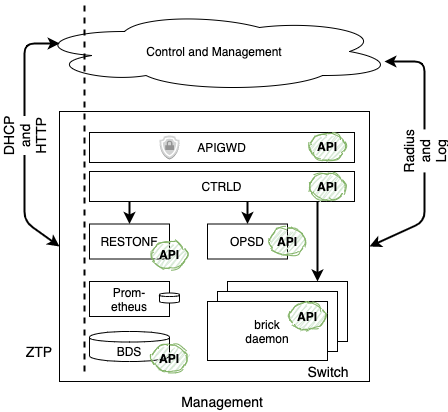

CtrlD is deployed on ONL (Open Network Linux) and acts as an intermediary between the RBFS container in the ONL and external systems. The following illustration presents a high-level overview of CtrlD.

The CtrlD API performs tasks such as starting the container and rebooting the device. During a software upgrade, the CtrlD API is used to trigger the upgrade process.

CtrlD also manages the container and controls the interaction between the different external systems such as Graylog. CtrlD uses REST APIs to control and manage the router. CtrlD is also responsible for gathering data from the router and forwarding this information to other systems.

CtrlD plays different roles in an RBFS ecosystem. It is the gateway for all other components interacting within or outside of an RBFS device. CtrlD acts as:

- Controller for router device running RBFS.

- Controller for elements in the device, such as LXC containers.

- Gateway to RBFS images and packages.

CtrlD Parameters

In a production environment, the CtrlD binary starts with default parameters as the rtbrick-ctrld service. To see these default parameters, use the ctrld -h command.

    $ ctrld -h
    Usage of ctrld:
      -addr string
            HTTP network address (default ":19091")
      -config string
            Configuration for the ctrld (default "/etc/rtbrick/ctrld/config.json")
      -lxccache string
            lxc Image Cache folder (default "/var/cache/rtbrick")
      -servefromfs
            Serves from filesystem, is only used for development
      -version
            Returns the software version

CtrlD Version

The command ctrld -version displays the installed version of the daemon. The version should be tagged correctly in the repository.

The CtrlD configuration is stored in the JSON file /etc/rtbrick/ctrld/config.json. Use the cat command to display the file content.

Example:

    $ cat /etc/rtbrick/ctrld/config.json
    {
      "rbms_enable": true,
      "rbms_host": "http://198.51.100.77",
      "rbms_authorization_header": "Basic YWRtaW46YWRtaW4=",
      "rbms_heartbeat_interval": 600
    }
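As an illustration, a configuration like the one above can be read and sanity-checked with a few lines of Python. This is a minimal sketch and not part of CtrlD: the helper names `load_ctrld_config` and `basic_auth_user` are hypothetical, the key names are taken from the example, and treating the heartbeat interval as a positive integer is an assumption.

```python
import base64
import json

# Example content copied from the configuration shown above.
EXAMPLE_CONFIG = """
{
  "rbms_enable": true,
  "rbms_host": "http://198.51.100.77",
  "rbms_authorization_header": "Basic YWRtaW46YWRtaW4=",
  "rbms_heartbeat_interval": 600
}
"""


def load_ctrld_config(text):
    """Parse a CtrlD-style config document and run a basic check (hypothetical helper)."""
    config = json.loads(text)
    interval = config.get("rbms_heartbeat_interval")
    # Assumption: the heartbeat interval is a positive integer.
    if not isinstance(interval, int) or interval <= 0:
        raise ValueError("rbms_heartbeat_interval must be a positive integer")
    return config


def basic_auth_user(header):
    """Return the user name carried in a 'Basic <base64>' authorization header."""
    scheme, _, token = header.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic authorization header")
    user, _, _password = base64.b64decode(token).decode("utf-8").partition(":")
    return user


config = load_ctrld_config(EXAMPLE_CONFIG)
print(config["rbms_host"], basic_auth_user(config["rbms_authorization_header"]))
```

Because a Basic authorization header is only base64-encoded, not encrypted, the configuration file should be readable only by trusted users.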

Container Management

CtrlD serves as the manager for containers. However, the RBMS, an external RBFS management system, is not aware of these containers, necessitating a systematic mapping. Understanding the correlation between RBMS, CtrlD, and Linux Containers (LXC) is important.

RBMS contains various elements, each uniquely identified by a name, representing a running RBFS instance. You can upgrade or downgrade Elements.

Each RBMS Element includes services. These services not only characterize the Element’s functionalities but also encapsulate the services running within it.

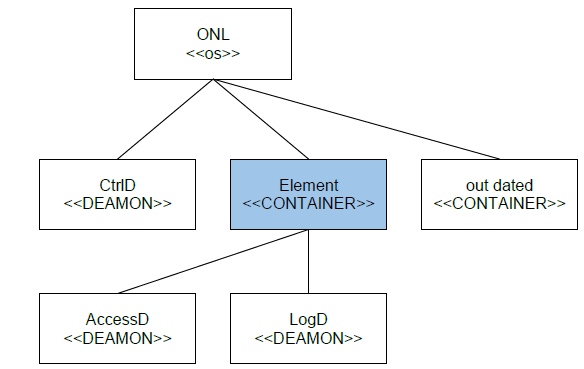

The foundational unit in the model is the 'element container', whether a single element exists on an ONL or not. The following figure illustrates the general structure of daemons and containers within the RBFS service model.

When an Element is upgraded or downgraded, the system automatically preserves the previous version in an outdated container. If the upgrade or downgrade fails, the outdated container can be recovered. Maintaining this backup minimizes potential disruptions and safeguards against data loss.

'Element' and 'container' are distinct concepts in RBFS. A container always refers to an lxc-container. However, in a white box environment, the container is simply named rtbrick.

You can configure the element-name and the pod-name of a container in the lxc-container root directory at: /var/lib/lxc/rtbrick/element.config.

This method offers several benefits:

Updating Container

- Upgrading or downgrading the containers (for example, upgrading to a higher version rbrick-v2).

- It is possible to stop the currently running container version 1 and to launch container version 2.

- It facilitates fast container updates. If an update fails, it can revert to the previous state by stopping the second container and restarting the first one.
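The update-and-revert flow described above can be sketched as follows. This is a minimal illustration, not CtrlD's implementation: `start` and `stop` are hypothetical callables standing in for whatever actually starts and stops an LXC container, and the container names are placeholders.

```python
def switch_container(current, new, start, stop):
    """Stop `current` and start `new`; if that fails, revert to `current`.

    `start` and `stop` are hypothetical callables that take a container name.
    Returns the name of the container that is running afterwards.
    """
    stop(current)
    try:
        start(new)
    except Exception:
        # Update failed: stop the second container and restart the first one.
        stop(new)
        start(current)
        return current
    return new


# Usage with in-memory stand-ins instead of real containers.
running = {"container-v1"}


def fake_start(name):
    if name == "broken":
        raise RuntimeError("container failed to start")
    running.add(name)


def fake_stop(name):
    running.discard(name)


active = switch_container("container-v1", "container-v2", fake_start, fake_stop)
rolled_back = switch_container(active, "broken", fake_start, fake_stop)
print(active, rolled_back, sorted(running))
```

The second call shows the failure path: the new container cannot start, so the previously running one is restarted and remains the active container.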

Rename Elements

If element.config is not available, default to using the element name as the container name.

Images Management

The images are stored within Open Network Linux (ONL) at /var/cache/lxc/rtbrick. For each image, there is one subfolder: /var/cache/lxc/rtbrick/<image-folder>. You can identify the images based on a set of fields, as described in the following table.

| Field | Description |
|---|---|
| organization | Organization that issued the image as reverse domain name (e.g. net.rtbrick). |
| category | Category which can be used to describe the purpose of the image. (e.g. customer-production) |
| platform | Describes the Hardware Platform. |
| vendor_name | Vendor of the platform |
| model_name | Model of the platform |
| image_type | Image type (for example, LXC) |
| image_name | Image name (for example, rbfs) |
| element_role | Element role the image was built for (for example, LEAF). |
| image_version | Image revision to be activated |
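Because an image is identified by the combination of these fields, two images can be compared field by field. The sketch below models that idea with a frozen dataclass. The class name `ImageIdentity` is hypothetical, representing the platform by its vendor_name and model_name is an assumption, and all values are illustrative.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ImageIdentity:
    """Hypothetical value object holding the identifying fields of an image."""
    organization: str
    category: str
    vendor_name: str   # assumed to be part of "platform"
    model_name: str    # assumed to be part of "platform"
    image_type: str
    image_name: str
    element_role: str
    image_version: str


cached = ImageIdentity(
    organization="net.rtbrick",
    category="customer-production",
    vendor_name="virtual/tofino",
    model_name="virtual",
    image_type="LXC",
    image_name="rbfs",
    element_role="LEAF",
    image_version="19.13.4-master",
)

# Same image except for the version (the version string is made up).
newer = replace(cached, image_version="19.13.5-master")
same_again = replace(newer, image_version=cached.image_version)

print(cached == newer, cached == same_again, len({cached, newer, same_again}))
```

A frozen dataclass is hashable, so identities can be used as dictionary keys or set members, for example to detect that an image is already present in the cache.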

Image Repository

The image folder contains the following files:

- A metadata.yaml file, which identifies the image.

  There can also be additional attributes in the file, but the attributes to identify an image have to be in the file.

  An example of the RtBrick properties is shown below.

      rtbrick_properties:
        organization: net.rtbrick
        category: customer-production
        platform:
          vendor_name: virtual/tofino
          model_name: virtual
        image_type: LXC
        role: LEAF
        image_name: rtbrick-rbfs
        image_version: 19.13.4-master

- A subfolder named rootfs

- The config.tpl file: This file is used to create the configuration file with the respective data in the template.

You can use the following syntax to add a property from the dictionary provided by CtrlD.

For example, lxc.rootfs.path = dir:{{index . "rootfs"}} results in lxc.rootfs.path = dir:/var/lib/lxc/mega/rootfs
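The expression has the form used by Go's text/template package, where `index` looks up a key in a map. The Python sketch below only imitates that single expression to show how the substitution works; it is a stand-in, not the template engine CtrlD uses, and the function name `render` is hypothetical.

```python
import re

# Matches the one expression form shown above: {{index . "key"}}
_INDEX_EXPR = re.compile(r'\{\{\s*index\s+\.\s+"([^"]+)"\s*\}\}')


def render(template, data):
    """Replace every {{index . "key"}} with data[key]; unknown keys raise KeyError."""
    return _INDEX_EXPR.sub(lambda match: str(data[match.group(1)]), template)


line = 'lxc.rootfs.path = dir:{{index . "rootfs"}}'
rendered = render(line, {"rootfs": "/var/lib/lxc/mega/rootfs"})
print(rendered)
```

Raising an error for an unknown key, rather than silently inserting an empty string, is a design choice of this sketch; it makes a missing property visible before a broken configuration file is written.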

Container and Element Management

LXC Containers are identified as elements if they have a metadata.yaml with the fields described above. These LXC containers can also be revised containers, which are created when an upgrade of a container takes place.

The revised element is named using the element name and a timestamp: revised-{element-name}-{timestamp}.
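The naming scheme can be expressed as a pair of small helpers. This is a sketch under stated assumptions: the function names are hypothetical, the timestamp format is not specified in this chapter (the value below is a placeholder), and parsing assumes that the timestamp itself contains no hyphen.

```python
import re


def revised_name(element_name, timestamp):
    """Build the container name of a revised element."""
    return f"revised-{element_name}-{timestamp}"


# Assumption: the timestamp contains no hyphen, so the last hyphen separates it.
_REVISED = re.compile(r"^revised-(?P<element>.+)-(?P<timestamp>[^-]+)$")


def parse_revised_name(container_name):
    """Return (element_name, timestamp), or None if the name is not a revised element."""
    match = _REVISED.match(container_name)
    if match is None:
        return None
    return match["element"], match["timestamp"]


name = revised_name("spine-1", "20240101T120000")
print(name, parse_revised_name(name))
```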

| It is not possible to rename an element. For more information, see How to rename LXD / LXC container. |

A template engine is used to update the container's LXC configuration template.

Each container has the files in the /var/lib/lxc/{container-name} folder, as shown in Table 3.

| File | Description |
|---|---|
| config.tpl | Template for lxc configuration |
| config.data | Data which was used to fill the templates (config, hostconfig). |
| metadata.yaml | Information about the image the container was built from, along with additional information. |

The status of an image can be CACHED or ACTIVE, as described in the following table.

| Status | Description |
|---|---|
| CACHED | This image is on the ONL |
| ACTIVE | This image is on the ONL and is the image used for the actual container instance. |
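The distinction between the two states can be captured in a small helper. This is only a sketch: the enum and function names are hypothetical, and the function assumes that the image is already known to be present on the ONL.

```python
from enum import Enum


class ImageStatus(Enum):
    CACHED = "CACHED"
    ACTIVE = "ACTIVE"


def image_status(image_id, active_image_id):
    """Status of an image that is on the ONL.

    `active_image_id` identifies the image used for the actual container
    instance; both identifiers are hypothetical placeholders.
    """
    if image_id == active_image_id:
        return ImageStatus.ACTIVE
    return ImageStatus.CACHED


print(image_status("image-a", "image-a").value, image_status("image-b", "image-a").value)
```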

CtrlD API

CtrlD has been designed with Domain Driven Design (DDD) principles. The model is divided into modules, known as Bounded Contexts in DDD. Similarly, the CtrlD API is organized into these modules. The CtrlD APIs are REST APIs that adhere to level 2 of the Richardson Maturity Model.

You can find an API overview within each running CtrlD instance:

http://<hostname>:<port>/public/openapi/
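A client can build this URL and fetch the overview with the Python standard library. The sketch below is illustrative: the function names and the host name are hypothetical, and using port 19091 as the default is an assumption based on the default value of the -addr parameter.

```python
from urllib.parse import urlunsplit
from urllib.request import urlopen


def openapi_url(hostname, port=19091):
    """Build the API overview URL of a CtrlD instance."""
    return urlunsplit(("http", f"{hostname}:{port}", "/public/openapi/", "", ""))


def fetch_openapi_overview(hostname, port=19091, timeout=5.0):
    """Download the API overview page; requires a reachable CtrlD instance."""
    with urlopen(openapi_url(hostname, port), timeout=timeout) as response:
        return response.read()


# Only the URL is built here; no network request is made.
print(openapi_url("ctrld.example.net"))
```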

The CtrlD API was redesigned when it was ported to the Golang programming language. To preserve backward compatibility, a module called AntiCorruptionLayer was introduced.

| Some older APIs may soon be deprecated or removed; use them with caution. |

The following table shows the API tags used to group the APIs by their respective modules.

| API Tag | Description |
|---|---|
| anti_corruption_layer | These APIs are deprecated and are only present for older systems to ensure backward compatibility. |
| client | These APIs are not provided by CtrlD, but rather, they are the APIs that a client must provide to use CtrlD’s callback function. |
| ctrld/config | Configure CtrlD. |
| ctrld/containers | Handle LXC containers (start, stop, delete, and list) |
| ctrld/elements | Handle elements (start, stop, delete, upgrade, and config) |
| ctrld/rbfs | Handle calls that come from the RBFS. |
| ctrld/images | Handle all requests regarding RBFS images. (download, delete, and list) |
| ctrld/jobs | Get information about asynchronous tasks. |
| ctrld/info | General information about CtrlD such as version, image, and so on. |
| ctrld/events | For the publish/subscribe sub-model, register for an event and stay informed about events. |
| ctrld/system | Communication with the underlying host system. |
| rbfs | Communication with an RBFS element such as Proxy, File Handling, and so on. |

Jobs and Callbacks

The Jobs API is needed for asynchronous API calls. An asynchronous API call can be used with a callback, so that the caller is informed when the job is finished, or with a polling mechanism, where the caller repeatedly asks the Jobs API whether the job is finished. Polling is sometimes easier to implement, especially for scripts like robot.
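The polling variant can be sketched as a simple loop. The code below does not call the real Jobs API: `get_state` is a hypothetical callable standing in for the request that returns the job state, the state names are made up, and the interval and timeout values are assumptions.

```python
import time


def wait_for_job(get_state, is_finished, interval=2.0, timeout=300.0,
                 sleep=time.sleep, clock=time.monotonic):
    """Poll `get_state()` until `is_finished(state)` is true or `timeout` elapses.

    Returns the final state and the number of polls that were needed.
    """
    deadline = clock() + timeout
    polls = 0
    while True:
        state = get_state()
        polls += 1
        if is_finished(state):
            return state, polls
        if clock() >= deadline:
            raise TimeoutError(f"job not finished after {timeout} seconds")
        sleep(interval)


# Stand-in for a Jobs API call that reports a finished job on the third poll.
states = iter(["running", "running", "finished"])
final_state, polls = wait_for_job(
    get_state=lambda: next(states),
    is_finished=lambda state: state == "finished",
    sleep=lambda _seconds: None,  # skip real waiting in this demo
)
print(final_state, polls)
```

Passing `sleep` and `clock` as parameters keeps the loop testable without real delays; a script would normally rely on the defaults.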

The callback mechanism uses a retry handler. The retry handler performs automatic retries under the following conditions:

- If an error is returned by the client (such as a connection error), then the retry is invoked after a waiting period.

- If a 500-range response code is received (except for 501 not implemented), then the retry is invoked after a waiting period.

- For a 501 response code and all other possibilities, the response is returned and it is up to the caller to interpret the reply.
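The retry conditions above can be written as a small decision function with a loop around it. This is a sketch of the described rules, not CtrlD's retry handler: the function names are hypothetical, `send` stands in for the callback request, and the number of retries and the waiting period are assumptions because this chapter does not state them.

```python
import time


def should_retry(status_code=None, error=None):
    """Apply the retry rules: client errors and 5xx (except 501) are retried."""
    if error is not None:
        return True
    if status_code is not None and 500 <= status_code <= 599 and status_code != 501:
        return True
    return False


def call_with_retry(send, max_retries=3, wait_seconds=1.0, sleep=time.sleep):
    """Call `send()` until it no longer qualifies for a retry.

    `send` returns an HTTP status code or raises OSError (for example,
    ConnectionError). `max_retries` and `wait_seconds` are assumed values.
    """
    attempt = 0
    while True:
        status, error = None, None
        try:
            status = send()
        except OSError as exc:
            error = exc
        if not should_retry(status, error) or attempt >= max_retries:
            if error is not None:
                raise error
            return status  # the caller interprets the reply
        attempt += 1
        sleep(wait_seconds)


# Demo: a connection error, then 503, then 200.
outcomes = iter([ConnectionError("connection refused"), 503, 200])
waits = []


def fake_send():
    outcome = next(outcomes)
    if isinstance(outcome, Exception):
        raise outcome
    return outcome


result = call_with_retry(fake_send, sleep=waits.append)
print(result, len(waits))
```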